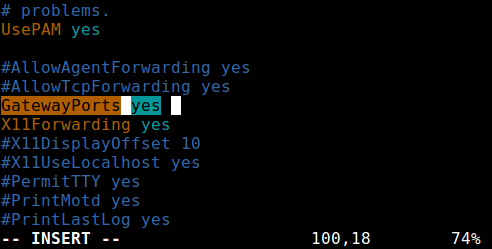

In my case, instead of creating a tunnel from Docker host machine to remote machine using ssh -L, I was creating remote forward SSH tunnel from remote machine to Docker host machine using ssh -L. In case anyone hits this problem but with a slightly different setup with the SSH tunnel, here are my findings. I agree with that answer should be the accepted answer. I'm pretty sure this will work regardless of the OS used (MacOS / Linux). The PHP container started talking through the tunnel without any problems: remember to put your public key inside that host if you haven't already: ssh-copy-id project-postgres-tunnel # uncomment if you wish to access the tunnel on the host Image: cagataygurturk/docker-ssh-tunnel:0.0.1 Instead of trying to allow a container to talk to the host, I simply added another service to the stack, which would create the tunnel, so other containers could talk to easily without any hacks.Īfter configuring a host inside my ~/.ssh/config: Host project-postgres-tunnelĪnd adding a service to the stack: postgres: The most important thing was I didn't want to use the host mode: security first. Instead of doing voodoo magic with iptables, doing modifications to the settings of the Docker engine itself I came up with a solution and was shocked I didn't thought of this earlier. I spent hours trying to find a solution (Ubuntu + Docker 19.03) and I failed. My case was as follows: I had a PostgreSQL SSH tunnel on my host and I needed one of my containers from the stack to connect to a database through it. So within your docker containers just channel the traffic to different ports of your docker0 bridge and then create several ssh tunnel commands (one for each port you are listening to) that intercept data at these ports and then forward it to the different hosts and hostports of your choice. 
Within your ssh tunnel command ( ssh -L port:host:hostport] the port part of the bind_address does not have to match the hostport of the host and, therefore, can be freely chosen by you. a docker container needs to connect to multiple remote DB's via tunnel), several valid techniques exist but an easy and straightforward way is to simply create multiple tunnels listening to traffic arriving at different docker0 bridge ports. When dealing with multiple outgoing connections (e.g. Now traffic being routed through your docker0 bridge will also reach your ssh tunnel :)įor example, if you have a "DOT.NET Core" application that needs to connect to a remote db located at :9000, your "ConnectionString" would contain "server=172.17.0.1,9000. In your containerized application use the same docker0 ip to connect to the server: 172.17.0.1:9000. Side note: You could also bind your tunnel to 0.0.0.0, which will make ssh listen to all interfaces. Without setting the bind_address, :9000 would only be available to your host's loopback interface and not per se to your docker containers. Now you need to tell ssh to bind to this ip to listen for traffic directed towards port 9000 via ssh -L 172.17.0.1:9000:host-ip:9999

You will see something like this: docker0 Link encap:Ethernet HWaddr 03:41:4a:26:b7:31

by binding ssh to the docker0 bridge) instead of exposing your docker containers in your host environment (as suggested in the accepted answer).įor this to work, retrieve the ip your docker0 bridge is using via: ifconfig In your case, a quick and cleaner solution would be to make your ssh tunnel "available" to your docker containers (e.g. Using your hosts network as network for your containers via -net=host or in docker-compose via network_mode: host is one option but this has the unwanted side effect that (a) you now expose the container ports in your host system and (b) that you cannot connect to those containers anymore that are not mapped to your host network. I also tried mapping the ports via docker run -p 9999:9000, but this reports that the bind failed because the host port is already in use (from the host tunnel to the remote machine, presumably).ġ - How will I achieve the connection? Do I need to setup an ssh tunnel to the host, or can this be achieved with the docker port mapping alone?Ģ - What's a quick way to test that the connection is up? Via bash, preferably. 2 but there was no perceived communication, even when doing

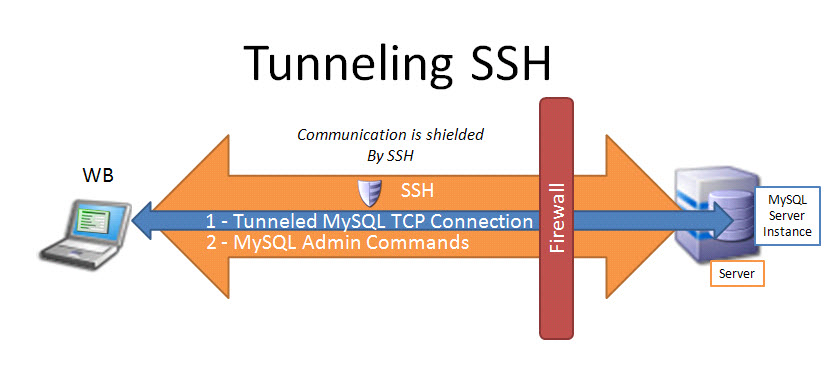

To check wether the port mapping was on, I triedįollowing this, parag. I tried tunneling from the container to the host with -L 9000:host-ip:9999, then accessing the service through 127.0.0.1:9000 from within the container fails to connect. I need to access that remote service via the host's tunnel, from within the container. Using ubuntu tusty, there is a service running on a remote machine, that I can access via port forwarding through an ssh tunnel from localhost:9999.
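On the question of a quick bash test, and for the variant with several tunnels bound to the docker0 bridge, a minimal sketch might look like the following. It is not taken from any of the answers: `user@gateway`, `other-host`, and the second port pair are hypothetical placeholders, the bridge address `172.17.0.1` is assumed from the example above, and `timeout` is assumed to be available (GNU coreutils). The ssh commands are only printed, not executed.

```shell
#!/usr/bin/env bash
# Sketch only: BRIDGE_IP, the ports, and the host names are placeholders;
# substitute the values from your own setup.

BRIDGE_IP="${BRIDGE_IP:-172.17.0.1}"   # docker0 address, e.g. as shown by ifconfig

# Build the argument for ssh -L in the form bind_address:port:host:hostport.
forward_spec() {
    local port="$1" host="$2" hostport="$3"
    printf '%s:%s:%s:%s\n' "$BRIDGE_IP" "$port" "$host" "$hostport"
}

# Return 0 if a TCP connection to host:port can be opened, non-zero otherwise.
# Relies on bash's /dev/tcp redirection, so no extra client tools are needed.
port_open() {
    local host="$1" port="$2"
    timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Print (rather than run) one ssh command per tunnel, each on its own bridge port.
echo "ssh -f -N -L $(forward_spec 9000 host-ip 9999) user@gateway"
echo "ssh -f -N -L $(forward_spec 9001 other-host 5432) user@gateway"
```

From inside a container, `port_open 172.17.0.1 9000 && echo up || echo down` then gives a quick answer. A successful connect only shows that ssh is listening on the bridge address; it does not by itself prove that the remote service behind the tunnel is responding.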
